What is the difference between soft and hard computing?

Hard computing is based on binary logic, crisp systems, numerical analysis, and crisp software. It can deal only with exact data.

Soft computing is based on fuzzy logic, neural networks, and probabilistic reasoning; that is, it is tolerant of imprecision, uncertainty, partial truth, and approximation. It can deal with noisy data.

What are the two components of risk?

Exposure and uncertainty.

What is the impact of bias on the model?

Models with low variance and high bias underfit the target. Models with high variance and low bias overfit it. If the true target is highly nonlinear and you choose a linear model to approximate it, you introduce a bias that comes from the linear model’s inability to capture the nonlinearity: the linear model underfits the nonlinear target function, even on the training set. Conversely, if the true target is linear and you choose a flexible nonlinear model, the extra flexibility lets the model fit the noise in the training set instead of the underlying linear relationship. That shows up as high variance rather than bias, and the nonlinear model overfits the linear target function.
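The underfitting case can be checked numerically. The following is a minimal sketch rather than a general recipe: `fit_line` is a hypothetical helper that fits a straight line by ordinary least squares, and the five data points are made-up values lying exactly on the curve y = x².

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_mean - b * x_mean
    return a, b


# Illustrative, noise-free nonlinear target: y = x^2
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x ** 2 for x in xs]

a, b = fit_line(xs, ys)

# Mean squared error of the straight line on its own training data
mse = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(a, b, mse)
```

Even though the data contain no noise, the best straight line is flat (slope 0) and leaves a training error of 2.8. That leftover error is the bias of the linear model on this target.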

What are Shrinkage Methods?

Shrinkage is a general technique for improving a least-squares estimator: it reduces the variance of the estimator by constraining the size of the coefficients, at the cost of some added bias. Ridge regression and the lasso are common examples.
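As a rough illustration of how a constraint shrinks a coefficient, here is a sketch of the simplest ridge-style case: one predictor, no intercept. The function name `ridge_slope`, the penalty weight `lam`, and the data are all assumptions made for this example. Minimizing sum((y - b*x)^2) + lam * b^2 gives b = sum(x*y) / (sum(x^2) + lam).

```python
def ridge_slope(xs, ys, lam):
    """Slope b that minimizes sum((y - b*x)^2) + lam * b^2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Made-up data that roughly follows y = 2x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

ols = ridge_slope(xs, ys, 0.0)      # lam = 0 recovers plain least squares
shrunk = ridge_slope(xs, ys, 10.0)  # a positive penalty pulls b toward 0
print(ols, shrunk)
```

With these numbers the unpenalized slope is about 1.99 and the penalized one is about 1.49. The larger `lam` is, the closer the coefficient is pulled toward zero.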

What is the method to reduce Bias?

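Resampling. Techniques such as the jackknife and the bootstrap can be used to estimate the bias of an estimator and correct for it.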

What is the difference between R and R square?

The coefficient of correlation is the “R” value given in the summary table of the regression output. R square is also called the coefficient of determination. Multiply R by R to get the R square value; in other words, the coefficient of determination is the square of the coefficient of correlation.

R square, or the coefficient of determination, denoted R² or r² and pronounced “R squared”, shows the percentage of the variation in y that is explained by all the x variables together. The higher, the better. For a least-squares fit with an intercept, evaluated on the data used to fit it, R² is always between 0 and 1. Computed on new data, or for a model without an intercept, the usual formula can give a negative value. Every time you add a predictor variable to the regression, R² increases or stays the same; it never decreases. Therefore, the more variables you add, the better the regression will seem to “fit” your data, even if the new variables are irrelevant. R² can be expressed in two ways:

- In terms of variance, as the share of the total variation in y that the model explains.

- As the square of the correlation coefficient.

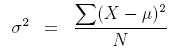
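For simple linear regression (one predictor plus an intercept), the variance-based view and the squared-correlation view of R square give the same number. The sketch below checks this on made-up data; `correlation_and_r_squared` is a hypothetical helper written for this example, not a library function.

```python
import math


def correlation_and_r_squared(xs, ys):
    """Return (r, R^2) for a least-squares line fitted to the points."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    syy = sum((y - y_mean) ** 2 for y in ys)  # total sum of squares
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))

    r = sxy / math.sqrt(sxx * syy)  # correlation coefficient

    # Fit the line, then measure the variation it leaves unexplained
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    r_squared = 1 - sse / syy
    return r, r_squared


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
r, r_squared = correlation_and_r_squared(xs, ys)
print(r * r, r_squared)  # both are about 0.6
```

With several predictors the identity no longer holds for any single pairwise correlation, so this check applies only to the one-predictor case.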

What is variance?

The variance (σ²) is defined as the sum of the squared distances of each term in the distribution from the mean (μ), divided by the number of terms in the distribution (N):

$$\sigma^2 = \frac{\sum (X - \mu)^2}{N}$$

X: individual data point

μ: mean of the data points

N: total number of data points
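The definition translates almost word for word into code. This is a small sketch on made-up numbers; `population_variance` is a hypothetical name, and the result is compared against `statistics.pvariance` from the Python standard library, which computes the same population variance.

```python
import statistics


def population_variance(data):
    """Sum of squared distances from the mean, divided by N."""
    n = len(data)
    mu = sum(data) / n
    return sum((x - mu) ** 2 for x in data) / n


data = [2, 4, 4, 4, 5, 5, 7, 9]  # illustrative values with mean 5
variance = population_variance(data)
print(variance, statistics.pvariance(data))
```

Here the squared distances add up to 32, so the variance is 32 / 8 = 4.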

What is Covariance?

Covariance and correlation describe how two variables are related.

- Variables are positively related if they move in the same direction.

- Variables are inversely related if they move in opposite directions.

$$COV(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n - 1}$$

x = the independent variable

y = the dependent variable

n = number of data points in the sample

$\bar{x}$ = the mean of the independent variable x

$\bar{y}$ = the mean of the dependent variable y
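A short sketch of the sample covariance shows how its sign tracks the direction of the relationship. It assumes the usual sample formula with an n - 1 divisor; `sample_covariance` and the data are made up for illustration.

```python
def sample_covariance(xs, ys):
    """Sample covariance: sum of products of deviations, divided by n - 1."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    return sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / (n - 1)


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
same_direction = [2.0, 4.0, 6.0, 8.0, 10.0]      # rises as x rises
opposite_direction = [10.0, 8.0, 6.0, 4.0, 2.0]  # falls as x rises

cov_pos = sample_covariance(xs, same_direction)
cov_neg = sample_covariance(xs, opposite_direction)
print(cov_pos, cov_neg)
```

The first pair gives a covariance of 5 and the second gives -5: positive when the variables move together, negative when they move in opposite directions.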

What is correlation?

Correlation is another way to determine how two variables are related.

The correlation measurement, called a correlation coefficient, always takes a value between −1 and 1:

$$r(x, y) = \frac{COV(x, y)}{s_x s_y}$$

r(x, y) = correlation of the variables x and y

COV(x, y) = covariance of the variables x and y

$s_x$ = sample standard deviation of the random variable x

$s_y$ = sample standard deviation of the random variable y
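The relationship between these quantities can be sketched as the covariance divided by the product of the two sample standard deviations. The helper names and data below are assumptions for illustration; `statistics.stdev` is the standard-library sample standard deviation.

```python
import statistics


def sample_covariance(xs, ys):
    """Sample covariance with an n - 1 divisor."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    return sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / (n - 1)


def correlation(xs, ys):
    """Correlation coefficient: covariance scaled by both standard deviations."""
    return sample_covariance(xs, ys) / (statistics.stdev(xs) * statistics.stdev(ys))


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
r = correlation(xs, ys)
print(r)
```

Dividing by the standard deviations removes the units, which is why the result always lands between -1 and 1 while the covariance can be any size.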

What is the difference between R square and RMSE in linear regression?

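The root mean squared error (RMSE) is the square root of the mean squared error (MSE), which is the sum of squared errors (SSE) divided by the number of data points n:

$$RMSE = \sqrt{MSE}, \qquad MSE = \frac{SSE}{n}$$

$$SSE = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

R square is the coefficient of determination:

$$R^2 = 1 - \frac{SSE}{TSS}$$

where TSS is the total sum of squares, $\sum_{i=1}^{n} (y_i - \bar{y})^2$. RMSE is an absolute measure of error, expressed in the units of y, while R square is a unitless, relative measure of fit.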
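One practical difference is how the two metrics react to the scale of y. The sketch below uses made-up actual and predicted values, takes MSE as SSE divided by the number of points, and recomputes both metrics after multiplying everything by 10; `rmse_and_r_squared` is a hypothetical helper.

```python
import math


def rmse_and_r_squared(actual, predicted):
    """Return (RMSE, R^2) computed from SSE and TSS."""
    n = len(actual)
    mean_actual = sum(actual) / n
    sse = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    tss = sum((y - mean_actual) ** 2 for y in actual)
    return math.sqrt(sse / n), 1 - sse / tss


actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 6.5, 9.5]
rmse, r2 = rmse_and_r_squared(actual, predicted)

# Same data expressed in units ten times smaller
rmse_scaled, r2_scaled = rmse_and_r_squared(
    [10 * y for y in actual], [10 * y for y in predicted]
)
print(rmse, r2, rmse_scaled, r2_scaled)
```

RMSE grows from 0.5 to 5 because it carries the units of y, while R square stays at about 0.95 in both cases.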

What is binning?

Binning is the process of transforming a numeric characteristic into a categorical one, as well as regrouping and consolidating categorical characteristics.
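As a minimal sketch of the numeric-to-categorical case, the example below maps ages to labelled bins using fixed cut points. The cut points, labels, and the helper `bin_value` are all hypothetical; `bisect_right` from the standard library finds which interval a value falls into.

```python
from bisect import bisect_right


def bin_value(value, edges, labels):
    """Return the label of the bin that contains value.

    edges are the sorted lower bounds of every bin after the first,
    so len(labels) must equal len(edges) + 1.
    """
    return labels[bisect_right(edges, value)]


edges = [25, 40, 60]                        # illustrative cut points
labels = ["<25", "25-39", "40-59", "60+"]

ages = [18, 25, 39, 40, 72]
binned = [bin_value(age, edges, labels) for age in ages]
print(binned)  # ['<25', '25-39', '25-39', '40-59', '60+']
```

Each cut point belongs to the bin on its right, so an age of exactly 25 goes into "25-39".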

Why is binning required?

- Increases scorecard stability: some attribute values will lead to instability if not grouped together.

- Improves quality: grouping strengthens the predictive power of a characteristic, which increases scorecard accuracy.

- Prevents scorecard impairment that could otherwise be caused by rare reversal patterns and extreme values.

- Prevents overfitting.

Automatic binning

The most widely used automatic binning algorithm is Chi-merge. Chi-merge divides a characteristic into intervals (bins) so that neighboring bins differ from each other as much as possible in their ratio of “Good” to “Bad” records. The weight of evidence (WOE) of the resulting bins shows how well the binning performs.
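To make the WOE idea concrete, here is a sketch that computes it per bin from counts of “Good” and “Bad” records. It assumes the common convention WOE = ln(share of all Goods in the bin / share of all Bads in the bin); some references use the inverse ratio, which only flips the sign. The counts and the helper `weight_of_evidence` are made up for illustration, and the sketch does not implement Chi-merge itself.

```python
import math


def weight_of_evidence(bins):
    """Map each bin name to ln(share of goods / share of bads).

    bins maps a bin name to a (good_count, bad_count) pair.
    Assumes every bin has at least one good and one bad record.
    """
    total_good = sum(good for good, _ in bins.values())
    total_bad = sum(bad for _, bad in bins.values())
    return {
        name: math.log((good / total_good) / (bad / total_bad))
        for name, (good, bad) in bins.items()
    }


# Illustrative counts: (good, bad) per bin
bins = {"<25": (20, 30), "25-39": (50, 15), "40+": (30, 5)}
woe = weight_of_evidence(bins)
print(woe)
```

Under this convention a negative WOE marks a bin with more than its share of Bad records, and a positive WOE marks a bin with more than its share of Good records. Neighboring bins with clearly different WOE values are what a good binning aims for.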

What is convergence tolerance?

During optimization, we use the gradient to move toward a minimum, but an iterative method rarely lands on the minimum exactly, so we need a rule for deciding when the iterates are close enough to stop. That rule is the convergence tolerance. We say that we have converged when $|f(x_t) - f(x_{t-1})| < \epsilon$ for some $\epsilon > 0$. This $\epsilon$ is our convergence tolerance.
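The stopping rule can be sketched with plain gradient descent on a simple function. Everything here is an assumption chosen for illustration: the objective f(x) = (x - 3)², the learning rate, the starting point, and the helper `gradient_descent`.

```python
def gradient_descent(f, grad, x0, learning_rate, tolerance, max_steps=1000):
    """Run gradient descent until |f(x_t) - f(x_{t-1})| < tolerance.

    Returns the final x and the number of steps taken.
    """
    x = x0
    previous_value = f(x)
    for step in range(1, max_steps + 1):
        x = x - learning_rate * grad(x)
        value = f(x)
        if abs(value - previous_value) < tolerance:
            return x, step  # change in f is below the tolerance: converged
        previous_value = value
    return x, max_steps  # gave up without meeting the tolerance


def f(x):
    return (x - 3.0) ** 2


def grad(x):
    return 2.0 * (x - 3.0)


x_min, steps = gradient_descent(f, grad, x0=0.0, learning_rate=0.1, tolerance=1e-8)
print(x_min, steps)
```

A smaller tolerance gives an answer closer to the true minimum at x = 3 but takes more steps, so the tolerance is a trade-off between accuracy and run time.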
